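Notes on how the Linux page reclaim thresholds wmark_min, wmark_low, and wmark_high are calculated.

Formula

Strictly speaking, the watermarks are calculated per zone on each NUMA node, but the system-wide totals can be approximated with the formulas below.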

    min_free_kbytes = sqrt(physical memory size (KB) * 16)
    wmark_min  = min_free_kbytes
    wmark_low  = wmark_min + (wmark_min / 4)
    wmark_high = wmark_min + (wmark_min / 2)

Worked example

For example, on x86_64 with 16 GB of physical memory, the values come out as follows.

    min_free_kbytes = sqrt(16,777,216 KB * 16) = 16,384 KB
    wmark_min  = 16,384 KB
    wmark_low  = 16,384 + (16,384 / 4) = 20,480 KB
    wmark_high = 16,384 + (16,384 / 2) = 24,576 KB

Results on a real machine

I checked this on a machine I had at hand → How to check usage of different parts of memory?

    $ uname -r
    2.6.39-400.17.1.el6uek.x86_64   ★ Kernel 2.6.39-400 uses present_pages, not managed_pages, in the calculation
    $ cat /proc/meminfo|head -1
    MemTotal:       16158544 kB     ★ physical memory size
    $ sysctl -a|grep min_free_kbytes
    vm.min_free_kbytes = 16114      ★ min_free_kbytes = 16,114 KB
    $ cat /proc/zoneinfo
    Node 0, zone      DMA
      pages free     3976
            min      3              ★ min_pages = 3 * 4KB = 12 KB
            low      3              ★ low_pages = 3 * 4KB = 12 KB
            high     4              ★ high_pages = 4 * 4KB = 16 KB
            scanned  0
            spanned  4080
            present  3920           ★
    ...
    Node 0, zone    DMA32
      pages free     327938
            min      822            ★ min_pages = 822 * 4KB = 3,288 KB
            low      1027           ★ low_pages = 1027 * 4KB = 4,108 KB
            high     1233           ★ high_pages = 1233 * 4KB = 4,932 KB
            scanned  0
            spanned  1044480
            present  828008         ★
    ...
    Node 0, zone   Normal
      pages free     3994
            min      3202           ★ min_pages = 3202 * 4KB = 12,808 KB
            low      4002           ★ low_pages = 4002 * 4KB = 16,008 KB
            high     4803           ★ high_pages = 4803 * 4KB = 19,212 KB
            scanned  0
            spanned  3270144
            present  3225435        ★

Summing min, low, and high over the three zones DMA, DMA32, and Normal gives the following.

    min:  16,108 KB (12 + 3,288 + 12,808)
    low:  20,128 KB (12 + 4,108 + 16,008)
    high: 24,160 KB (16 + 4,932 + 19,212)

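As shown above, the thresholds are not actually system-wide. They are kept per NUMA node and, within each node, per zone (DMA, DMA32, and Normal on x86_64), and page reclaim appears to be triggered when an individual zone falls below its own threshold.

To estimate the per-zone thresholds on each NUMA node accurately, the calculation becomes a little more involved, as がちゃぴん先生 pointed out.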

@yoheia @hasegaw Sorry for taking so long to reply. Isn't the WMARK_MIN calculation missing these two lines?

tmp = (u64)pages_min * zone->managed_pages;

do_div(tmp, lowmem_pages);

— がちゃぴん先生 (@kosaki55tea) October 28, 2015

Background

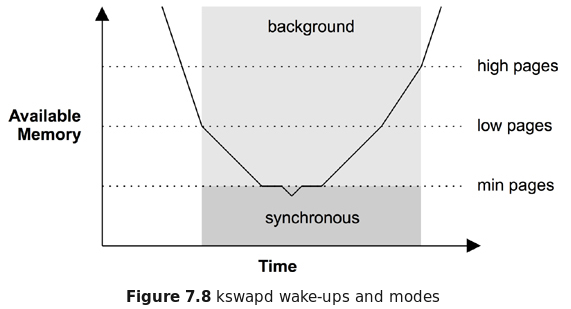

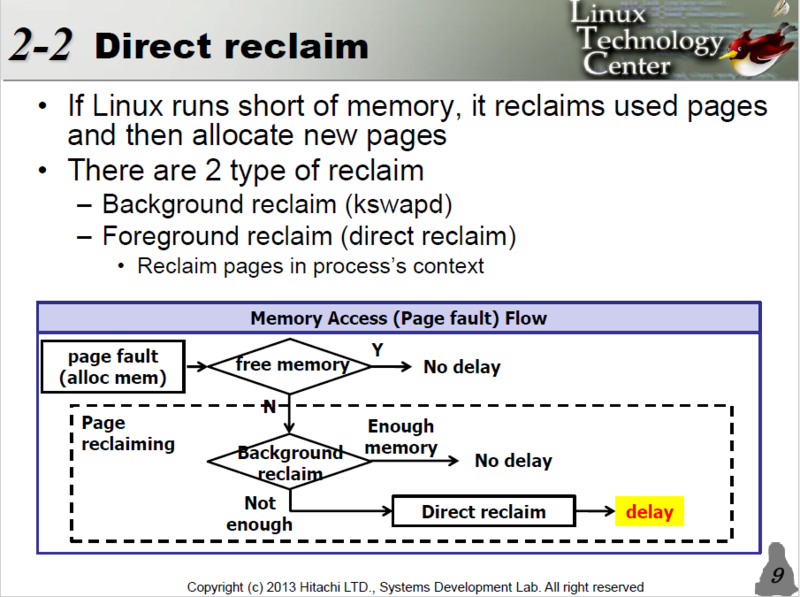

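Linux*1 puts free memory to good use: when files*2 are read or written, their contents are cached*3 in memory. When free memory runs low, the kernel reclaims pages to make room, freeing less frequently used pages based on the LRU lists*4.

Types of page reclaim and how they behave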

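- Background reclaim

  - When free memory falls below wmark_low (low pages), the kernel thread kswapd starts reclaiming pages in the background.

  - When free memory rises above wmark_high (high pages), kswapd stops reclaiming pages.

- Direct reclaim

  - When free memory falls below wmark_min (min pages), a process that requests memory has to reclaim pages itself to free up space before its allocation is satisfied.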

From Systems Performance: Enterprise and the Cloud, Figure 7.8 kswapd wake-ups and modes

From Reducing Memory Access Latency

References

Professional Linux Kernel Architecture (Wrox Programmer to Programmer)

- Author: Wolfgang Mauerer

- Publisher: Wrox

- Publication date: 2008/10/13

- Format: Paperback


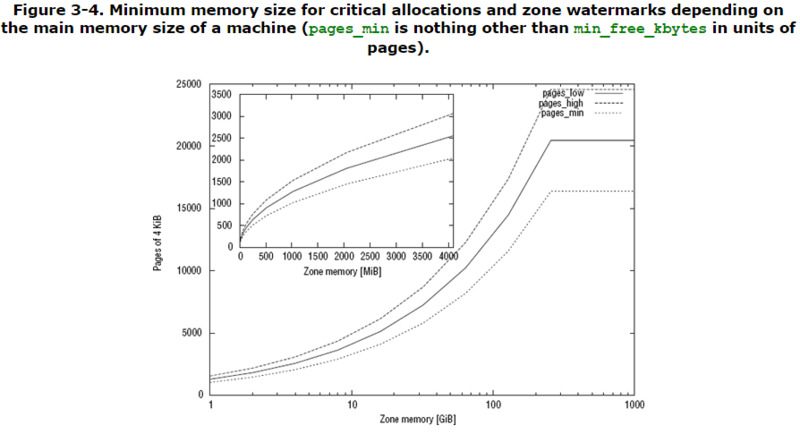

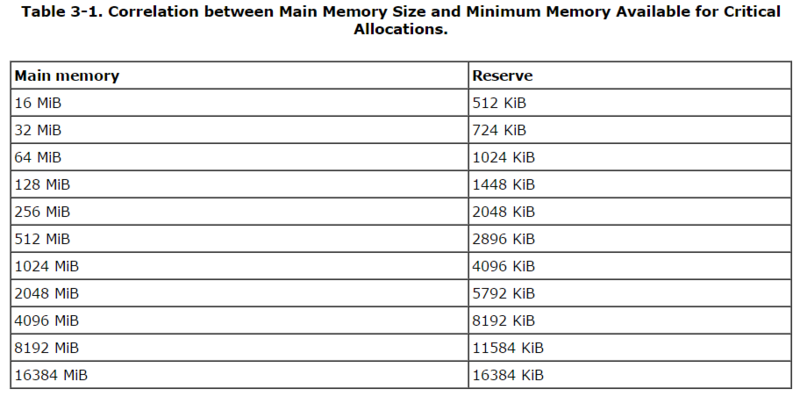

Before calculating the various watermarks, the kernel first determines the minimum memory space that must remain free for critical allocations. This value scales nonlinearly with the size of the available RAM. It is stored in the global variable min_free_kbytes. Figure 3.4 provides an overview of the scaling behavior, and the inset — which does not use a logarithmic scale for the main memory size in contrast to the main graph — shows a magnification of the region up to 4 GiB. Some exemplary values to provide a feeling for the situation on systems with modest memory that are common in desktop environments are collected in Table 3.1. An invariant is that not less than 128 KiB but not more than 64 MiB may be used. Note, however, that the upper bound is only necessary on machines equipped with a really satisfactory amount of main memory. The file /proc/sys/vm/min_free_kbytes allows reading and adapting the value from userland.

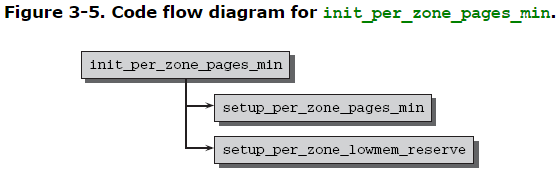

Filling the watermarks in the data structure is handled by init_per_zone_pages_min, which is invoked during kernel boot and need not be started explicitly.

setup_per_zone_pages_min sets the pages_min, pages_low, and pages_high elements of struct zone. After the total number of pages outside the highmem zone has been calculated (and stored in lowmem_pages), the kernel iterates over all zones in the system and performs the following calculation:

- mm/page_alloc.c

    void setup_per_zone_pages_min(void)
    {
        unsigned long pages_min = min_free_kbytes >> (PAGE_SHIFT - 10);
        unsigned long lowmem_pages = 0;
        struct zone *zone;
        unsigned long flags;
        ...
        for_each_zone(zone) {
            u64 tmp;

            tmp = (u64)pages_min * zone->present_pages;
            do_div(tmp,lowmem_pages);
            if (is_highmem(zone)) {
                int min_pages;

                min_pages = zone->present_pages / 1024;
                if (min_pages < SWAP_CLUSTER_MAX)
                    min_pages = SWAP_CLUSTER_MAX;
                if (min_pages > 128)
                    min_pages = 128;
                zone->pages_min = min_pages;
            } else {
                zone->pages_min = tmp;
            }
            zone->pages_low = zone->pages_min + (tmp >> 2);
            zone->pages_high = zone->pages_min + (tmp >> 1);
        }
    }

    static void __setup_per_zone_wmarks(void)
    {
        unsigned long pages_min = min_free_kbytes >> (PAGE_SHIFT - 10);
        unsigned long lowmem_pages = 0;
        struct zone *zone;
        unsigned long flags;

        /* Calculate total number of !ZONE_HIGHMEM pages */
        for_each_zone(zone) {
            if (!is_highmem(zone))
                lowmem_pages += zone->managed_pages;
        }

        for_each_zone(zone) {
            u64 tmp;

            spin_lock_irqsave(&zone->lock, flags);
            tmp = (u64)pages_min * zone->managed_pages;
            do_div(tmp, lowmem_pages);
            if (is_highmem(zone)) {
                /*
                 * __GFP_HIGH and PF_MEMALLOC allocations usually don't
                 * need highmem pages, so cap pages_min to a small
                 * value here.
                 *
                 * The WMARK_HIGH-WMARK_LOW and (WMARK_LOW-WMARK_MIN)
                 * deltas controls asynch page reclaim, and so should
                 * not be capped for highmem.
                 */
                unsigned long min_pages;

                min_pages = zone->managed_pages / 1024;
                min_pages = clamp(min_pages, SWAP_CLUSTER_MAX, 128UL);
                zone->watermark[WMARK_MIN] = min_pages;
            } else {
                /*
                 * If it's a lowmem zone, reserve a number of pages
                 * proportionate to the zone's size.
                 */
                zone->watermark[WMARK_MIN] = tmp;
            }

            zone->watermark[WMARK_LOW]  = min_wmark_pages(zone) + (tmp >> 2);
            zone->watermark[WMARK_HIGH] = min_wmark_pages(zone) + (tmp >> 1);

            setup_zone_migrate_reserve(zone);
            spin_unlock_irqrestore(&zone->lock, flags);
        }

        /* update totalreserve_pages */
        calculate_totalreserve_pages();
    }

    ...

    /*
     * Initialise min_free_kbytes.
     *
     * For small machines we want it small (128k min). For large machines
     * we want it large (64MB max). But it is not linear, because network
     * bandwidth does not increase linearly with machine size. We use
     *
     *     min_free_kbytes = 4 * sqrt(lowmem_kbytes), for better accuracy: ★
     *     min_free_kbytes = sqrt(lowmem_kbytes * 16) ★
     *
     * which yields
     *
     * 16MB:    512k
     * 32MB:    724k
     * 64MB:    1024k
     * 128MB:   1448k
     * 256MB:   2048k
     * 512MB:   2896k
     * 1024MB:  4096k
     * 2048MB:  5792k
     * 4096MB:  8192k
     * 8192MB:  11584k
     * 16384MB: 16384k
     */
    int __meminit init_per_zone_wmark_min(void)
    {
        unsigned long lowmem_kbytes;

        lowmem_kbytes = nr_free_buffer_pages() * (PAGE_SIZE >> 10);

        min_free_kbytes = int_sqrt(lowmem_kbytes * 16);
        if (min_free_kbytes < 128)
            min_free_kbytes = 128;
        if (min_free_kbytes > 65536)
            min_free_kbytes = 65536;
        setup_per_zone_wmarks();
        refresh_zone_stat_thresholds();
        setup_per_zone_lowmem_reserve();
        setup_per_zone_inactive_ratio();
        return 0;
    }
    module_init(init_per_zone_wmark_min)

This is an example of the problem and solution above.

- System Memory: 2GB

- High memory pressure

In this case, min_free_kbytes and watermarks are automatically set as follows.
(Here, watermark shows sum of the each zone's watermark.)

    min_free_kbytes: 5752
    watermark[min] : 5752
    watermark[low] : 7190
    watermark[high]: 8628

Tunable watermark [LWN.net]

min_free_kbytes:

This is used to force the Linux VM to keep a minimum number of kilobytes free. The VM uses this number to compute a watermark[WMARK_MIN] value for each lowmem zone in the system. Each lowmem zone gets a number of reserved free pages based proportionally on its size.

Some minimal amount of memory is needed to satisfy PF_MEMALLOC allocations; if you set this to lower than 1024KB, your system will become subtly broken, and prone to deadlock under high loads.

Setting this too high will OOM your machine instantly.

vm.txt\sysctl\Documentation - kernel/git/stable/linux.git - Linux kernel stable tree