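This is the Step Functions variant of the earlier ablog post "Writing tab-delimited CSV without double quotes with Glue PySpark": the quote character and separator are passed to the Glue job as parameters from Step Functions.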

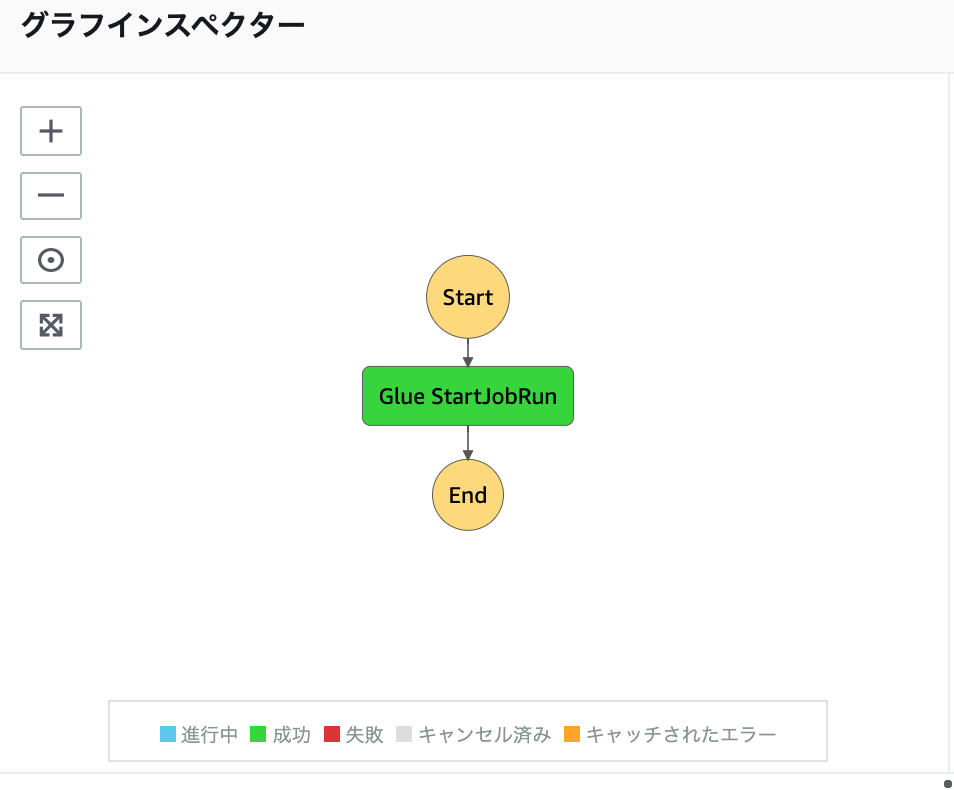

- Step Functions state machine

```json
{
  "Comment": "This is your state machine",
  "StartAt": "Glue StartJobRun",
  "States": {
    "Glue StartJobRun": {
      "Type": "Task",
      "Resource": "arn:aws:states:::glue:startJobRun.sync",
      "Parameters": {
        "JobName": "no_quote_test",
        "Arguments": {
          "--QUOTE_CHAR": "-1",
          "--SEPARATOR": "\t"
        }
      },
      "End": true
    }
  }
}
```
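
If the definition is generated by a script instead of written by hand, a literal tab character in the separator is serialized as the JSON escape `\t`, which is exactly what the definition above contains. The sketch below assumes a hypothetical helper `build_definition`; it only builds the JSON string and does not call AWS.

```python
import json


def build_definition(job_name: str, quote_char: str, separator: str) -> str:
    """Hypothetical helper: build the state machine definition above as a JSON string."""
    definition = {
        "Comment": "This is your state machine",
        "StartAt": "Glue StartJobRun",
        "States": {
            "Glue StartJobRun": {
                "Type": "Task",
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {
                    "JobName": job_name,
                    # Each key becomes a "--KEY value" job argument.
                    "Arguments": {
                        "--QUOTE_CHAR": quote_char,
                        "--SEPARATOR": separator,
                    },
                },
                "End": True,
            }
        },
    }
    # json.dumps escapes the real tab character as \t in the output text.
    return json.dumps(definition, indent=2)


if __name__ == "__main__":
    print(build_definition("no_quote_test", "-1", "\t"))
```

Going through `json.dumps` avoids hand-escaping mistakes such as accidentally writing a literal backslash followed by `t`.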

- Receiving the arguments in the Glue Spark job

```python
## @params: [JOB_NAME]
args = getResolvedOptions(sys.argv, ['JOB_NAME', 'QUOTE_CHAR', 'SEPARATOR'])  # <- here
sc = SparkContext()
glueContext = GlueContext(sc)
spark = glueContext.spark_session
job = Job(glueContext)
job.init(args['JOB_NAME'], args)
strQuoteChar = args['QUOTE_CHAR']  # <- here
strSeparator = args['SEPARATOR']   # <- here

# ... (omitted)

datasink2 = glueContext.write_dynamic_frame.from_options(
    frame = applymapping1,
    connection_type = "s3",
    connection_options = {"path": "s3://dl-sfdc-dm/test/no_quote_test"},
    format = "csv",
    format_options = {
        "quoteChar": strQuoteChar,
        "separator": strSeparator
    },
    transformation_ctx = "datasink2"
)
```
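
The `Arguments` set in Step Functions reach the script as `--KEY value` pairs in `sys.argv`, and `getResolvedOptions` picks out the names you list. To check that locally without the `awsglue` library, here is a simplified stand-in built on `argparse`. `resolve_options` is a hypothetical name and this is not the awsglue implementation; it only shows that `-1` and a tab survive as plain string values and that unrelated arguments are ignored.

```python
import argparse
from typing import Dict, List


def resolve_options(argv: List[str], names: List[str]) -> Dict[str, str]:
    """Hypothetical, simplified stand-in for awsglue.utils.getResolvedOptions."""
    parser = argparse.ArgumentParser(add_help=False)
    for name in names:
        # Every requested option must be present, e.g. --QUOTE_CHAR -1
        parser.add_argument("--" + name, required=True)
    # parse_known_args ignores extra arguments (e.g. other job parameters).
    known, _extras = parser.parse_known_args(argv[1:])
    return {name: getattr(known, name) for name in names}


if __name__ == "__main__":
    argv = [
        "script.py",
        "--JOB_NAME", "no_quote_test",
        "--QUOTE_CHAR", "-1",
        "--SEPARATOR", "\t",
        "--job-bookmark-option", "job-bookmark-disable",
    ]
    print(resolve_options(argv, ["JOB_NAME", "QUOTE_CHAR", "SEPARATOR"]))
```

Both values arrive as strings, so `strQuoteChar` in the job is the string `"-1"`, not the integer used in the commented-out hard-coded line.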

Full code

```python
import sys
from awsglue.transforms import *
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job

## @params: [JOB_NAME]
args = getResolvedOptions(sys.argv, ['JOB_NAME', 'QUOTE_CHAR', 'SEPARATOR'])

sc = SparkContext()
glueContext = GlueContext(sc)
spark = glueContext.spark_session
job = Job(glueContext)
job.init(args['JOB_NAME'], args)

strQuoteChar = args['QUOTE_CHAR']  # <- here
strSeparator = args['SEPARATOR']   # <- here
# Hard-coded equivalents:
#strQuoteChar = -1
#strSeparator = "\t"

## @type: DataSource
## @args: [database = "default", table_name = "no_quote_test", transformation_ctx = "datasource0"]
## @return: datasource0
## @inputs: []
datasource0 = glueContext.create_dynamic_frame.from_catalog(
    database = "default",
    table_name = "no_quote_test",
    transformation_ctx = "datasource0")

## @type: ApplyMapping
## @args: [mapping = [("col0", "string", "col0", "string"), ("col1", "string", "col1", "string"), ("col2", "string", "col2", "string"), ("col3", "string", "col3", "string")], transformation_ctx = "applymapping1"]
## @return: applymapping1
## @inputs: [frame = datasource0]
applymapping1 = ApplyMapping.apply(
    frame = datasource0,
    mappings = [("col0", "string", "col0", "string"),
                ("col1", "string", "col1", "string"),
                ("col2", "string", "col2", "string"),
                ("col3", "string", "col3", "string")],
    transformation_ctx = "applymapping1")

## @type: DataSink
## @args: [connection_type = "s3", connection_options = {"path": "s3://dl-sfdc-dm/test/no_quote_test"}, format = "csv", transformation_ctx = "datasink2"]
## @return: datasink2
## @inputs: [frame = applymapping1]
datasink2 = glueContext.write_dynamic_frame.from_options(
    frame = applymapping1,
    connection_type = "s3",
    connection_options = {"path": "s3://dl-sfdc-dm/test/no_quote_test"},
    format = "csv",
    format_options = {"quoteChar": strQuoteChar, "separator": strSeparator},
    transformation_ctx = "datasink2")

job.commit()
```
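
To preview locally the kind of output the job is meant to produce (tab-separated, no quoting), Python's standard `csv` module can render sample rows with `quoting=csv.QUOTE_NONE`. This is only an approximation using made-up rows and a hypothetical `preview_tsv` helper; it is not Glue's CSV writer and says nothing about how Glue handles values that contain the separator.

```python
import csv
import io
from typing import Iterable, List


def preview_tsv(rows: Iterable[List[str]]) -> str:
    """Render rows tab-separated with quoting disabled (local approximation only)."""
    buf = io.StringIO()
    writer = csv.writer(
        buf,
        delimiter="\t",           # same role as "separator": "\t"
        quoting=csv.QUOTE_NONE,   # no quote characters, like quoteChar -1
        lineterminator="\n",
    )
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    print(preview_tsv([["1", "foo", "bar", "baz"], ["2", "a", "b", "c"]]), end="")
```

Note that with `QUOTE_NONE` and no `escapechar`, Python's `csv` raises `csv.Error` when a value contains a tab, so unquoted TSV is only safe when the data itself never contains the separator.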